1. Commands related to the Rockchip NPU

# Check the NPU driver version

cat /sys/kernel/debug/rknpu/version

# Check the NPU utilization on the Rockchip SoC

cat /sys/kernel/debug/rknpu/load

# Watch the NPU load update in real time

watch -d "cat /sys/kernel/debug/rknpu/load"
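For logging or alerting rather than watching the terminal, the load file can also be parsed in a script. The following is a minimal sketch, assuming the file prints per-core entries such as `Core0: 12%` (other driver versions may print a single `NPU load: 12%` value, which the fallback branch handles). The function name `parse_npu_load` and the sample string are illustrative and not part of any Rockchip tool.

```python
import re
from pathlib import Path

# Same debugfs path as the cat command above; reading it normally requires root.
LOAD_FILE = Path("/sys/kernel/debug/rknpu/load")


def parse_npu_load(text: str) -> dict[str, int]:
    """Return {core_name: percent} parsed from the rknpu load text.

    Assumed formats (may differ between driver versions):
      "NPU load:  Core0: 12%, Core1:  0%, Core2:  3%,"
      "NPU load:  12%"
    """
    per_core = re.findall(r"(Core\d+):\s*(\d+)%", text)
    if per_core:
        return {name: int(pct) for name, pct in per_core}
    single = re.search(r"(\d+)%", text)
    return {"NPU": int(single.group(1))} if single else {}


if __name__ == "__main__":
    try:
        text = LOAD_FILE.read_text()
    except OSError:
        # Sample line for hosts without an NPU or without debugfs access.
        text = "NPU load:  Core0: 12%, Core1:  0%, Core2:  3%,"
    print(parse_npu_load(text))
```

Keeping the parsing separate from the file read makes it easy to check the regular expression against whatever your driver version actually prints.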

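# Pin the RK3588 NPU to a fixed frequency (performance governor).
# The pattern is quoted so the shell cannot expand it before find runs; it matches every *governor node under /sys, not only the NPU's.

echo performance | tee $(find /sys/ -name '*governor')

2. Install git lfs and pull the DeepSeek model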

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash

apt-get install git-lfs

systemctl restart unattended-upgrades.service

git lfs install

git lfs clone ......

# Pull the DeepSeek model

# 1.5b

git lfs clone https://www.modelscope.cn/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B.git

# 7b

git lfs clone https://www.modelscope.cn/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B.git

3. Install conda

wget https://repo.anaconda.com/archive/Anaconda3-2024.10-1-Linux-aarch64.sh

chmod u+x Anaconda3-2024.10-1-Linux-aarch64.sh

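# Specify the install directory

./Anaconda3-2024.10-1-Linux-aarch64.sh -b -p /sata/lyqiao/anaconda3

If your .bashrc does not yet contain the conda configuration, use the following as a reference. The paths in it point to /home/linaro/anaconda3, so replace them with your own install prefix (/sata/lyqiao/anaconda3 in the command above).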

# ~/.bashrc: executed by bash(1) for non-login shells.

# Note: PS1 and umask are already set in /etc/profile. You should not

# need this unless you want different defaults for root.

# PS1='${debian_chroot:+($debian_chroot)}\h:\w\$ '

# umask 022

# You may uncomment the following lines if you want `ls' to be colorized:

# export LS_OPTIONS='--color=auto'

# eval "$(dircolors)"

# alias ls='ls $LS_OPTIONS'

# alias ll='ls $LS_OPTIONS -l'

# alias l='ls $LS_OPTIONS -lA'

#

# Some more alias to avoid making mistakes:

# alias rm='rm -i'

# alias cp='cp -i'

# alias mv='mv -i'

export LC_ALL=zh_CN.UTF-8

export LANG=zh_CN.UTF-8

export LANGUAGE=zh_CN.UTF-8

# >>> conda initialize >>>

# !! Contents within this block are managed by 'conda init' !!

__conda_setup="$('/home/linaro/anaconda3/bin/conda' 'shell.bash' 'hook' 2> /dev/null)"

if [ $? -eq 0 ]; then
    eval "$__conda_setup"
else
    if [ -f "/home/linaro/anaconda3/etc/profile.d/conda.sh" ]; then
        . "/home/linaro/anaconda3/etc/profile.d/conda.sh"
    else
        export PATH="/home/linaro/anaconda3/bin:$PATH"
    fi
fi

unset __conda_setup

# <<< conda initialize <<<

PATH=$PATH:/home/linaro/.local/bin

export PATH

# Created by `pipx` on 2025-02-13 09:47:18

export PATH="$PATH:/home/linaro/.local/bin"

4. Create a Python virtual environment for rkllm

source ~/.bashrc

conda create --prefix /data/modules/rkllm python=3.10.16

conda activate /data/modules/rkllm

5. Quantize the model

(1) Download rknn-llm, the LLM conversion tool provided by Rockchip, and install its dependencies

https://github.com/airockchip/rknn-llm

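https://codeload.github.com/airockchip/rknn-llm/tar.gz/refs/tags/release-v1.1.4

Note: RKNN-LLM is a solution for running large language models (LLMs) on the RKNN platform. LLMs usually need substantial compute resources (GPUs, large amounts of memory), whereas the RKNN platform mainly targets edge devices. RKNN-LLM therefore uses quantization and optimization to deploy LLMs on resource-constrained devices and run AI inference locally.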

tar zxvf rknn-llm-release-v1.1.4.tar.gz -C /data/modules/

cd /data/modules/rknn-llm-release-v1.1.4/

cd /data/modules/rknn-llm-release-v1.1.4/rkllm-toolkit/packages

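pip install rkllm_toolkit-1.1.4-cp310-cp310-linux_x86_64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

Note:

- Choose the rkllm_toolkit-1.1.4 wheel that matches your Python version. The conda environment created above uses Python 3.10.16, so the right file is rkllm_toolkit-1.1.4-cp310-cp310-linux_x86_64.whl.

- With Python 3.8.x, choose rkllm_toolkit-1.1.4-cp38-cp38-linux_x86_64.whl instead.

- The wheels are tagged linux_x86_64, so the toolkit has to be installed in an x86_64 Linux environment.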
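The naming rule in the note above can be sketched in a few lines of Python. This is only an illustration: the helper name `wheel_name` is made up here, and only the cp310 and cp38 filenames are confirmed by the text, so a name computed for any other Python version may not exist in the packages directory.

```python
import sys


def wheel_name(major: int, minor: int, toolkit_version: str = "1.1.4") -> str:
    """Build the rkllm_toolkit wheel filename for a CPython major.minor version."""
    tag = f"cp{major}{minor}"  # e.g. 3.10 -> cp310, 3.8 -> cp38
    return f"rkllm_toolkit-{toolkit_version}-{tag}-{tag}-linux_x86_64.whl"


if __name__ == "__main__":
    # Print the filename matching the interpreter that runs this script.
    print(wheel_name(sys.version_info.major, sys.version_info.minor))
```

Running it inside the activated conda environment shows which file to look for before calling pip install.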

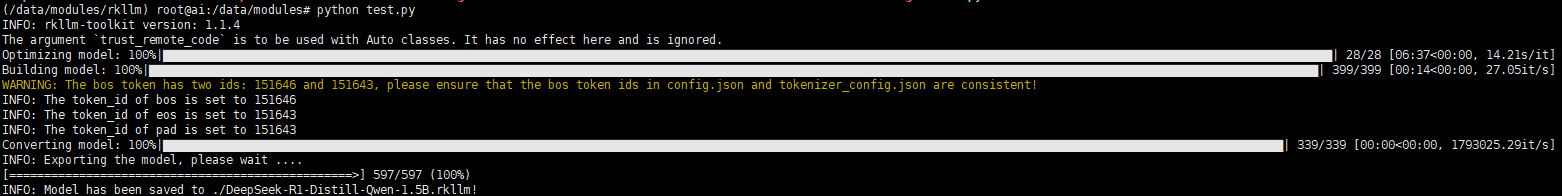

(2) Quantize the model

Edit the conversion script test.py

# vim /data/modules/rknn-llm-release-v1.1.4/rkllm-toolkit/examples/test.py

# Note: DeepSeek-R1-Distill-Qwen-1.5B here is the directory of the model cloned with git lfs in section 2 above

modelpath = '/data/modules/DeepSeek-R1-Distill-Qwen-1.5B'

dataset = None

# Output path of the generated model

ret = llm.export_rkllm("./DeepSeek-R1-Distill-Qwen-1.5B.rkllm")

Note: 4-bit quantization (w4a16) is also possible, but output quality degrades considerably.

Run the conversion

cd /data/modules/rknn-llm-release-v1.1.4/rkllm-toolkit/examples

python test.py

6. Build the binary

Install the Arm cross-compilation toolchain

wget https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu-a/10.2-2020.11/binrel/gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu.tar.xz

tar xvf gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu.tar.xz -C /data/soft

Add the toolchain to the PATH environment variable

# vim /etc/profile

PATH=$PATH:/data/soft/gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu/bin

export PATH

Edit the demo source file

# vim /data/modules/rknn-llm-release-v1.1.4/examples/rkllm_api_demo/src/llm_demo.cpp

// Add the following two lines

#define PROMPT_TEXT_PREFIX "<|im_start|>system\nYou are a helpful assistant.\n<|im_end|>\n<|im_start|>user\n"

#define PROMPT_TEXT_POSTFIX "\n<|im_end|>\n<|im_start|>assistant\n<think>"

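// Comment out the original text = input_str and replace it with the line below

text = PROMPT_TEXT_PREFIX + input_str + PROMPT_TEXT_POSTFIX;

Note: why modify PROMPT_TEXT_PREFIX and PROMPT_TEXT_POSTFIX? See Table 1 of the DeepSeek-R1 paper: prompts sent to a DeepSeek-R1 model need to follow its format.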
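To see exactly what string the model receives after this change, the same concatenation can be reproduced outside the C++ demo. The sketch below copies the two macro values from the lines above; the function name `build_prompt` is hypothetical and only mirrors the `text = ...` assignment.

```python
# Values copied from the two #define lines added to llm_demo.cpp.
PROMPT_TEXT_PREFIX = (
    "<|im_start|>system\nYou are a helpful assistant.\n<|im_end|>\n"
    "<|im_start|>user\n"
)
PROMPT_TEXT_POSTFIX = "\n<|im_end|>\n<|im_start|>assistant\n<think>"


def build_prompt(user_input: str) -> str:
    """Wrap the raw user input the same way the modified demo does."""
    return PROMPT_TEXT_PREFIX + user_input + PROMPT_TEXT_POSTFIX


if __name__ == "__main__":
    print(build_prompt("你好"))
```

The printed prompt ends with the opening `<think>` tag, so the model starts its reply inside the reasoning section.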

Edit the build script

# vim /data/modules/rknn-llm-release-v1.1.4/examples/rkllm_api_demo/build-linux.sh

GCC_COMPILER_PATH=/data/soft/gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu/bin/aarch64-none-linux-gnu

Run the build

apt install cmake

cd /data/modules/rknn-llm-release-v1.1.4/examples/rkllm_api_demo

bash build-linux.sh

The resulting executable is build/build_linux_aarch64_Release/llm_demo

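7. Copy llm_demo and the quantized model to the RK3588 device

# On the RK3588 device, create a directory for the files and change into it

mkdir dsnpu

cd dsnpu

# RKLLM runtime shared library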

scp -r root@192.168.58.41:/data/modules/rknn-llm-release-v1.1.4/rkllm-runtime/Linux/librkllm_api/aarch64 ./

# Compiled executable

scp -r root@192.168.58.41:/data/modules/rknn-llm-release-v1.1.4/examples/rkllm_api_demo/build/build_linux_aarch64_Release/llm_demo ./

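# Quantized model (adjust the source path if the .rkllm file is still in rkllm-toolkit/examples, where test.py writes it)

scp root@192.168.58.41:/data/modules/DeepSeek-R1-Distill-Qwen-1.5B.rkllm ./

8. Run

# Set environment variables (LD_LIBRARY_PATH must point at the aarch64 directory copied in step 7)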

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/sata/ai/aarch64

export RKLLM_LOG_LEVEL=1

# Pin the RK3588 NPU to a fixed frequency (performance governor)

echo performance | tee $(find /sys/ -name '*governor')

chmod u+x llm_demo

./llm_demo DeepSeek-R1-Distill-Qwen-1.5B.rkllm 10000 10000
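The run step can also be wrapped in a small launcher so the environment is set the same way every time. This is a sketch under assumptions: the two numeric arguments are taken to be max_new_tokens and max_context_len, following the usage string of rkllm_api_demo (check llm_demo.cpp in your release), and the helper names `build_env` and `build_command` are made up for this example.

```python
import os
import subprocess


def build_env(base_env: dict, lib_dir: str, log_level: int = 1) -> dict:
    """Copy base_env, append lib_dir to LD_LIBRARY_PATH and set RKLLM_LOG_LEVEL."""
    env = dict(base_env)
    existing = env.get("LD_LIBRARY_PATH", "")
    # Avoid a leading ':' when LD_LIBRARY_PATH was empty or unset.
    env["LD_LIBRARY_PATH"] = f"{existing}:{lib_dir}" if existing else lib_dir
    env["RKLLM_LOG_LEVEL"] = str(log_level)
    return env


def build_command(model: str, max_new_tokens: int, max_context_len: int) -> list:
    """Argument order assumed from the demo: model path, then the two limits."""
    return ["./llm_demo", model, str(max_new_tokens), str(max_context_len)]


if __name__ == "__main__":
    cmd = build_command("DeepSeek-R1-Distill-Qwen-1.5B.rkllm", 10000, 10000)
    env = build_env(dict(os.environ), "/sata/ai/aarch64")
    if os.path.exists(cmd[0]):
        subprocess.run(cmd, env=env, check=True)
    else:
        print("llm_demo not found; would run:", " ".join(cmd))
```

Unlike the plain export line, the launcher does not leave a leading colon in LD_LIBRARY_PATH when the variable was previously unset.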

9. Test

curl -X POST http://172.18.17.201:8089/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:1.5b",
    "messages": [
      {
        "role": "user",
        "content": "你好"
      }
    ]
  }'

curl -X POST http://172.18.17.200:18080/light-app/open-api/1889571663627751425/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:1.5b",
    "messages": [
      {
        "role": "user",
        "content": "你好"
      }
    ]
  }'

curl -X POST http://172.18.17.200:8089/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:1.5b",
    "messages": [
      {
        "role": "user",
        "content": "你好"
      }
    ]
  }'

curl -X POST http://172.18.17.200:18080/light-app/open-api/1889586140679049217/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:1.5b",
    "messages": [
      {
        "role": "user",
        "content": "你好"
      }
    ]
  }'

curl -X POST -H "Content-Type: application/json" -d '{"model": "deepseek-r1:1.5b", "messages": [{"role": "user", "content": "你好"}]}' 'http://172.18.17.200:18080/light-app/open-api/1889586140679049217/api/chat'

curl -X POST https://fm.aolingo.com/api/chat \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:1.5b",
    "messages": [
      {
        "role": "user",
        "content": "你好"
      }
    ]
  }'
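The curl calls above all send the same JSON body to different endpoints, so the request can be built once in a script and reused. The following is a minimal sketch using only the Python standard library; the helper name `build_chat_request` is hypothetical, and the response format of these services is not described in this document, so the sending part is left commented out.

```python
import json
import urllib.request


def build_chat_request(url: str, content: str,
                       model: str = "deepseek-r1:1.5b") -> urllib.request.Request:
    """Build a POST request carrying the same JSON body as the curl examples."""
    payload = {"model": model, "messages": [{"role": "user", "content": content}]}
    body = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("http://172.18.17.201:8089/api/chat", "你好")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it (the server must be reachable from this host):
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(resp.read().decode("utf-8"))
```

Building the body with json.dumps avoids the quoting mistakes that are easy to make when writing JSON by hand inside a shell string.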