I. System optimization

1. Enable IPv4 forwarding and let iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

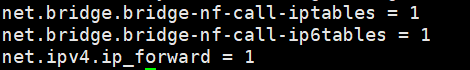

# Set the sysctl parameters Kubernetes needs; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

# Check the results

lsmod | grep br_netfilter

lsmod | grep overlay

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward

Verify that the three system variables net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables and net.ipv4.ip_forward are present in your sysctl configuration and set to 1.
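The same check can be scripted so it is easy to repeat on every node. The sketch below reads the values straight from /proc/sys (a sysctl key maps to a path there with dots replaced by slashes) and reports any key that is not 1. The function name `check_k8s_sysctl` and its optional root-directory argument are hypothetical; the argument only exists so the function can be tried against a fake directory tree.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that the sysctl keys kubeadm expects are set to 1.
# Optional $1 overrides the /proc/sys root (useful for testing on a fake tree).
check_k8s_sysctl() {
  local root="${1:-/proc/sys}"
  local key path val rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [[ -r "$path" ]]; then
      val=$(<"$path")
    else
      # The bridge-nf-call-* entries only exist once br_netfilter is loaded
      val="missing"
    fi
    if [[ "$val" == "1" ]]; then
      echo "OK   $key = 1"
    else
      echo "FAIL $key = $val"
      rc=1
    fi
  done
  return "$rc"
}

# Example: check_k8s_sysctl || echo "sysctl settings are incomplete"
```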

2. Disable the swap partition

swapoff -a && sed -i '/swap/d' /etc/fstab

II. Install Kubernetes and CRI-O on all nodes
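Before installing the packages, it is worth confirming on each node that the swap step above took effect, since the kubelet by default refuses to start while swap is enabled. A minimal sketch, assuming a hypothetical function name `check_swap_off`: it counts active swap areas in /proc/swaps (one header line, then one line per area) and uncommented swap entries in /etc/fstab; both paths can be overridden for testing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm swap is off now and will stay off after a reboot.
# $1 = swaps file (default /proc/swaps), $2 = fstab (default /etc/fstab)
check_swap_off() {
  local swaps="${1:-/proc/swaps}" fstab="${2:-/etc/fstab}" active entries
  # /proc/swaps: one header line, then one line per active swap area
  active=$(awk 'NR > 1 { n++ } END { print n + 0 }' "$swaps") || return 2
  # fstab: uncommented lines whose third field (filesystem type) is "swap"
  entries=$(awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$fstab") || return 2
  echo "active_swap=${active} fstab_swap_entries=${entries}"
  [[ "$active" -eq 0 && "$entries" -eq 0 ]]
}

# Example: check_swap_off || echo "swap is still configured"
```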

The process is the same on all three control-plane nodes. Adjust the IP addresses and other host-specific values to match your own machines.

1. Install Kubernetes

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

tee /etc/apt/sources.list.d/kubernetes.list <<EOF

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF
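The three Kubernetes packages should stay on the same version. As an optional sketch, the pinned package arguments for the install command that follows can be derived from a single variable; `k8s_pkg_args` is a hypothetical helper. Holding the packages with `apt-mark hold` afterwards, as the upstream kubeadm installation guide does, keeps a routine `apt upgrade` from moving them to another version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "kubelet=<ver> kubeadm=<ver> kubectl=<ver>".
k8s_pkg_args() {
  local ver="${1:-}" pkg out=()
  if [[ -z "$ver" ]]; then
    echo "usage: k8s_pkg_args <apt version, e.g. 1.26.4-00>" >&2
    return 2
  fi
  for pkg in kubelet kubeadm kubectl; do
    out+=("${pkg}=${ver}")
  done
  echo "${out[*]}"
}

# Example use (needs root and the repository configured above):
#   K8S_VERSION=1.26.4-00
#   apt -y install $(k8s_pkg_args "$K8S_VERSION") kubernetes-cni
#   apt-mark hold kubelet kubeadm kubectl
```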

apt update -y && \
apt -y install vim git curl wget kubelet=1.26.4-00 kubeadm=1.26.4-00 kubectl=1.26.4-00 kubernetes-cni

2. Install CRI-O

See the separate article "Kubernetes: Using CRI-O" for the installation steps.

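Edit the CRI-O configuration file and change the registry of the pause image. Otherwise connections to port 6443 will be refused: the default pause image may be pulled from a registry that is unreachable, in which case CRI-O cannot create pod sandboxes and the control-plane static pods, including kube-apiserver, never start.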
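To see which pause image CRI-O is configured with, before and after the edit, the active pause_image line can be read straight from the file. A minimal sketch; `crio_pause_image` is a hypothetical name, and it only looks at the single file given (default /etc/crio/crio.conf), so a value set in a drop-in file under /etc/crio/crio.conf.d/ would not be seen. If it prints nothing, the line is commented out and CRI-O uses its built-in default.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the pause_image value set in one CRI-O config file.
# Commented lines ("# pause_image = ...") are ignored; prints nothing if unset.
crio_pause_image() {
  local conf="${1:-/etc/crio/crio.conf}"
  if [[ ! -r "$conf" ]]; then
    echo "cannot read $conf" >&2
    return 1
  fi
  # Keep only the quoted value; if the key appears twice, the last one is reported
  sed -n 's/^[[:space:]]*pause_image[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' "$conf" | tail -n 1
}

# Example: crio_pause_image   # should show the registry.cn-hangzhou.aliyuncs.com image after the edit
```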

vim /etc/crio/crio.conf

[crio.image]

insecure_registries = ["registry.cn-hangzhou.aliyuncs.com"]

pause_image = "registry.cn-hangzhou.aliyuncs.com/qly-k8s/pause:3.9"

[crio.runtime]

conmon = "/usr/bin/conmon"

Restart the crio service after making the changes:

systemctl restart crio

3. Configure /etc/hosts

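If this is not configured, the following error is reported: "Error getting node" err="node \"k8s1\" not found". Add entries like the following on every node (this example is the file on k8s1):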

127.0.0.1 localhost

127.0.1.1 k8s1

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.2.24 k8s1

192.168.2.25 k8s2

192.168.2.26 k8s3

192.168.2.27 work1

192.168.2.28 work2

192.168.2.29 work3
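Because every node needs the same cluster entries, a quick check that a hosts file maps each name to the expected address can catch typos before kubeadm runs. A sketch under these assumptions: `check_hosts` is a hypothetical name, and the name-to-IP pairs are passed as `name=ip` arguments. It only confirms that a matching line exists; it does not model which entry the resolver returns first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a hosts file maps each name to the expected IP.
# Usage: check_hosts <hosts-file> name=ip [name=ip ...]
check_hosts() {
  local file="${1:-}" pair name ip rc=0
  if [[ ! -r "$file" ]]; then
    echo "cannot read hosts file: $file" >&2
    return 2
  fi
  shift
  for pair in "$@"; do
    if [[ "$pair" != *=* ]]; then
      echo "bad argument: $pair (expected name=ip)" >&2
      rc=1
      continue
    fi
    name="${pair%%=*}"
    ip="${pair#*=}"
    # Look for an uncommented line whose first field is the IP and that lists the name
    if awk -v ip="$ip" -v name="$name" '
         $1 !~ /^#/ && $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
         END { exit !found }' "$file"; then
      echo "OK   $name -> $ip"
    else
      echo "FAIL $name -> $ip"
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_hosts /etc/hosts k8s1=192.168.2.24 k8s2=192.168.2.25 k8s3=192.168.2.26 \
#     work1=192.168.2.27 work2=192.168.2.28 work3=192.168.2.29
```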

III. Initialize control-plane node 1

1. Create the node initialization configuration file

(1) Export the default kubeadm configuration

kubeadm config print init-defaults > kubeadm-init-config.yaml

(2) Edit the configuration file

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: v1.26.4

apiServer:

  certSANs:

  - 192.168.2.24

  extraArgs:

    apiserver-count: "3"

controlPlaneEndpoint: "192.168.2.24:6443"

etcd:

  external:

    endpoints:

    - https://192.168.2.21:2379

    - https://192.168.2.22:2379

    - https://192.168.2.23:2379

    caFile: /etc/etcd/cert/ca.pem

    certFile: /etc/etcd/cert/kubernetes.pem

    keyFile: /etc/etcd/cert/kubernetes-key.pem

imageRepository: registry.cn-hangzhou.aliyuncs.com/qly-k8s

networking:

  podSubnet: 10.30.0.0/24
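The three control-plane configurations in this guide differ only in the node IP, so they can be generated from one template to avoid copy-and-paste mistakes. A sketch, assuming a hypothetical `gen_kubeadm_config` helper; it writes the nested `apiServer.certSANs` and `apiServer.extraArgs` fields of the v1beta3 schema and keeps this guide's etcd, image repository and pod subnet values. One caveat on the pod subnet: kube-controller-manager assigns each node a /24 by default, so a /24 pod subnet leaves room for only one node's pod CIDR; the optional second argument allows a wider range.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a ClusterConfiguration for one control-plane node.
# $1 = node IP (used for controlPlaneEndpoint and certSANs, as in this guide)
# $2 = pod subnet (optional, defaults to the value used in this guide)
gen_kubeadm_config() {
  local node_ip="${1:-}" pod_subnet="${2:-10.30.0.0/24}"
  if [[ -z "$node_ip" ]]; then
    echo "usage: gen_kubeadm_config <node-ip> [pod-subnet]" >&2
    return 2
  fi
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.4
apiServer:
  certSANs:
  - ${node_ip}
  extraArgs:
    apiserver-count: "3"
controlPlaneEndpoint: "${node_ip}:6443"
etcd:
  external:
    endpoints:
    - https://192.168.2.21:2379
    - https://192.168.2.22:2379
    - https://192.168.2.23:2379
    caFile: /etc/etcd/cert/ca.pem
    certFile: /etc/etcd/cert/kubernetes.pem
    keyFile: /etc/etcd/cert/kubernetes-key.pem
imageRepository: registry.cn-hangzhou.aliyuncs.com/qly-k8s
networking:
  podSubnet: ${pod_subnet}
EOF
}

# Example: gen_kubeadm_config 192.168.2.24 > kubeadm-init-config.yaml
```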

2. Initialize the node

kubeadm init --config kubeadm-init-config.yaml

Be sure to save the kubeadm join command printed at the end of the output; it is needed later to add the worker nodes.

If initializing the node fails, reset it and run the initialization again:

kubeadm reset

3. Copy the certificates to the other two control-plane nodes
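The scp commands below copy the whole pki directory, and the receiving nodes then delete apiserver.* so that kubeadm regenerates the API server certificate for their own addresses. An alternative, used by the upstream kubeadm HA guide for manual certificate distribution, is to copy only the node-independent files: the cluster CA, the service-account key pair and the front-proxy CA. A sketch with a hypothetical `stage_shared_pki` helper that collects them into a staging directory; the etcd client files under /etc/etcd/cert/ referenced by the configuration must also exist on every control-plane node and are not handled here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy only the node-independent files from a kubeadm pki
# directory into a staging directory (no apiserver.* or other per-node certs).
stage_shared_pki() {
  local src="${1:-}" dst="${2:-}" f
  if [[ -z "$src" || -z "$dst" ]]; then
    echo "usage: stage_shared_pki <src-pki-dir> <dst-dir>" >&2
    return 2
  fi
  mkdir -p "$dst" || return 1
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
    if [[ ! -f "$src/$f" ]]; then
      echo "missing $src/$f" >&2
      return 1
    fi
    cp -p "$src/$f" "$dst/$f" || return 1
  done
  # Keep the private keys readable by their owner only
  chmod 600 "$dst"/*.key
}

# Example (on k8s1); the rm of apiserver.* on k8s2/k8s3 then has nothing to delete:
#   stage_shared_pki /etc/kubernetes/pki /tmp/pki
#   scp -r /tmp/pki k8s2:~/
#   scp -r /tmp/pki k8s3:~/
```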

scp -r /etc/kubernetes/pki/ k8s2:~/

scp -r /etc/kubernetes/pki/ k8s3:~/

IV. Initialize control-plane node 2

1. Delete apiserver.crt and apiserver.key

rm ~/pki/apiserver.*

2. Move the certificates to /etc/kubernetes

mv ~/pki/ /etc/kubernetes/

3. Save the following configuration as kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: v1.26.4

apiServer:

  certSANs:

  - 192.168.2.25

  extraArgs:

    apiserver-count: "3"

controlPlaneEndpoint: "192.168.2.25:6443"

etcd:

  external:

    endpoints:

    - https://192.168.2.21:2379

    - https://192.168.2.22:2379

    - https://192.168.2.23:2379

    caFile: /etc/etcd/cert/ca.pem

    certFile: /etc/etcd/cert/kubernetes.pem

    keyFile: /etc/etcd/cert/kubernetes-key.pem

imageRepository: registry.cn-hangzhou.aliyuncs.com/qly-k8s

networking:

  podSubnet: 10.30.0.0/24

4. Initialize the node

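kubeadm init --config kubeadm-config.yaml

The kubeadm join command printed here carries the same --discovery-token-ca-cert-hash as the one printed for the first node, because both nodes use the same CA certificate. This shows that the new control-plane node belongs to the same cluster as the existing one.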
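To confirm that two control-plane nodes really share a CA, the value of --discovery-token-ca-cert-hash can be recomputed from /etc/kubernetes/pki/ca.crt on each node; it is the SHA-256 digest of the CA's DER-encoded public key. The sketch wraps the openssl pipeline given in the kubeadm join documentation (with -noout added so only the key is passed on) in a hypothetical `ca_cert_hash` function. Like that pipeline, it assumes an RSA CA key, which is kubeadm's default.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the kubeadm discovery hash ("sha256:<hex>") of a CA cert.
# The body runs in a subshell so that pipefail does not leak into the caller.
ca_cert_hash() (
  set -o pipefail
  crt="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, convert it to DER, then hash it
  out=$(openssl x509 -pubkey -noout -in "$crt" 2>/dev/null \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex) || { echo "could not hash $crt" >&2; exit 1; }
  # openssl dgst prints "...(stdin)= <hex>"; keep the part after the last space
  hex="${out##* }"
  [[ "$hex" =~ ^[0-9a-f]{64}$ ]] || { echo "unexpected digest output" >&2; exit 1; }
  echo "sha256:${hex}"
)

# Example: run on k8s1 and k8s2 and compare with the hash in the join command
#   ca_cert_hash
```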

V. Initialize control-plane node 3

1. Delete apiserver.crt and apiserver.key

rm ~/pki/apiserver.*

2. Move the certificates to /etc/kubernetes

mv ~/pki/ /etc/kubernetes/

3. Save the following configuration as kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: v1.26.4

apiServer:

  certSANs:

  - 192.168.2.26

  extraArgs:

    apiserver-count: "3"

controlPlaneEndpoint: "192.168.2.26:6443"

etcd:

  external:

    endpoints:

    - https://192.168.2.21:2379

    - https://192.168.2.22:2379

    - https://192.168.2.23:2379

    caFile: /etc/etcd/cert/ca.pem

    certFile: /etc/etcd/cert/kubernetes.pem

    keyFile: /etc/etcd/cert/kubernetes-key.pem

imageRepository: registry.cn-hangzhou.aliyuncs.com/qly-k8s

networking:

  podSubnet: 10.30.0.0/24

4. Initialize the node

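kubeadm init --config kubeadm-config.yaml

Again, the printed kubeadm join command carries the same --discovery-token-ca-cert-hash as the one printed for the first node, because all control-plane nodes use the same CA certificate. This shows that the new control-plane node belongs to the same cluster as the existing ones.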

VI. Add worker nodes

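Initializing any of the control-plane nodes prints the commands for joining further nodes. Because all control-plane nodes share the same CA and the same etcd cluster, the commands printed by the different nodes are interchangeable, so recording one copy is enough, for example: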

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.2.24:6443 --token jvpkho.y6tdcdi9jxpprjw6 \

--discovery-token-ca-cert-hash sha256:e4385aa9cc9df2a81b5ef59680369c1051a284195995afa91f8e1e5565044a58 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.24:6443 --token jvpkho.y6tdcdi9jxpprjw6 \

--discovery-token-ca-cert-hash sha256:e4385aa9cc9df2a81b5ef59680369c1051a284195995afa91f8e1e5565044a58

The second command, the one without --control-plane, adds worker nodes; run it on each worker node.
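Bootstrap tokens created by kubeadm init expire after 24 hours by default, so a saved join command may stop working; running `kubeadm token create --print-join-command` on a control-plane node prints a fresh one. When the join command is kept in a saved copy of the init output, its pieces can be pulled out with a small parser. A sketch; `parse_join_command` is a hypothetical helper that reads the text on stdin and reports the endpoint, token and CA hash of the first `kubeadm join` command it finds.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract endpoint, token and CA hash from saved kubeadm output.
# Reads stdin; line continuations ("\") and line breaks are flattened first.
# Returns 1 if no token or CA hash was found.
parse_join_command() {
  tr -d '\\' | tr -s ' \t\n' ' ' | awk '
    {
      for (i = 1; i < NF; i++) {
        if ($i == "join" && $(i - 1) == "kubeadm" && ep == "") ep = $(i + 1)
        if ($i == "--token" && tok == "") tok = $(i + 1)
        if ($i == "--discovery-token-ca-cert-hash" && hash == "") hash = $(i + 1)
      }
    }
    END {
      print "endpoint=" ep; print "token=" tok; print "ca_hash=" hash
      exit (tok == "" || hash == "")
    }'
}

# Example: parse_join_command < kubeadm-init.log
```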

VII. Configure HAProxy

1. Install HAProxy

apt install haproxy

2. Edit the configuration file

cd /etc/haproxy/

cp haproxy.cfg haproxy.cfg.bak

vim haproxy.cfg

frontend kubernetes

bind 192.168.2.20:6443

option tcplog

mode tcp

default_backend kubernetes-master-nodes

backend kubernetes-master-nodes

mode tcp

balance roundrobin

option tcp-check

server k8s-master-0 192.168.2.24:6443 check fall 3 rise 2

server k8s-master-1 192.168.2.25:6443 check fall 3 rise 2

server k8s-master-2 192.168.2.26:6443 check fall 3 rise 2

3. Start the service

systemctl enable haproxy

systemctl restart haproxy

systemctl status haproxy
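Before pointing clients at 192.168.2.20:6443, it can help to confirm from the HAProxy host that each backend API server accepts TCP connections; `haproxy -c -f /etc/haproxy/haproxy.cfg` separately checks the file's syntax. The sketch below uses hypothetical helpers: `haproxy_backends` lists the `server` lines of a config file, and `probe_backends` opens a TCP connection to each through bash's /dev/tcp pseudo-device with a 3-second timeout. It checks reachability only, not API server health.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the HAProxy backends from the HAProxy host.

# Print "name address:port" for every uncommented "server" line of a config file.
haproxy_backends() {
  local cfg="${1:-/etc/haproxy/haproxy.cfg}"
  awk '$1 == "server" { print $2, $3 }' "$cfg"
}

# Try a TCP connection to each backend; prints UP/DOWN per server, returns 1 if any is down.
probe_backends() {
  local cfg="${1:-/etc/haproxy/haproxy.cfg}" name addr host port rc=0
  while read -r name addr; do
    host="${addr%:*}"
    port="${addr##*:}"
    # Redirecting from /dev/tcp/HOST/PORT makes bash open a TCP connection;
    # timeout stops the attempt after 3 seconds if the host does not answer.
    if timeout 3 bash -c ": < /dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "UP   $name $addr"
    else
      echo "DOWN $name $addr"
      rc=1
    fi
  done < <(haproxy_backends "$cfg")
  return "$rc"
}

# Example (on the HAProxy host):
#   haproxy -c -f /etc/haproxy/haproxy.cfg && probe_backends
```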

Configure client access

Keep a record of the join commands printed during initialization; the remaining nodes are added to the cluster with them.

Check the status of the nodes with:

kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes

3. Configure the client environment

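In the example below, the client is the node on which the cluster was initialized. If the client is a different machine, first use scp to copy admin.conf from the initial node to the client.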

mkdir -p $HOME/.kube && \

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && \

chown $(id -u):$(id -g) $HOME/.kube/config

Check the node status:

kubectl get nodes
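To wait for the nodes from a script instead of rerunning kubectl get nodes by hand, the STATUS column can be checked. A minimal sketch, with `nodes_not_ready` as a hypothetical helper: it reads `kubectl get nodes --no-headers` output on stdin, prints every node whose status does not start with Ready (a cordoned node shows Ready,SchedulingDisabled and counts as ready here), and returns 2 on empty input so that a failed kubectl call is not mistaken for success.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list nodes that are not Ready in `kubectl get nodes --no-headers` output.
# Exit status: 0 = all Ready, 1 = some not Ready, 2 = no input (e.g. kubectl failed).
nodes_not_ready() {
  awk '
    { split($2, s, ","); if (s[1] != "Ready") { print $1; bad = 1 } }
    END { if (NR == 0) exit 2; exit (bad ? 1 : 0) }'
}

# Example: poll every 10 seconds until every node reports Ready
#   until kubectl get nodes --no-headers | nodes_not_ready >/dev/null; do sleep 10; done
```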